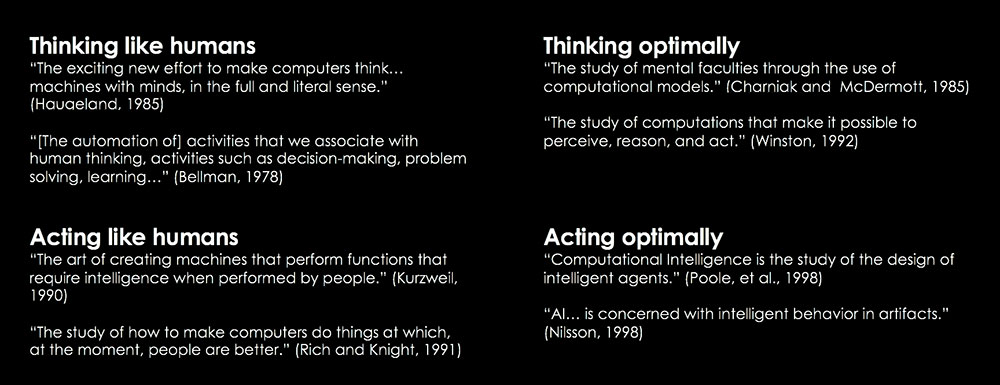

You might be surprised to know that work on Artificial Intelligence started shortly after the end of WW II. In fact, the term “artificial intelligence” was first coined in 1956 (see Dartmouth Workshop). In their book Artificial Intelligence: A Modern Approach (Third Edition), Stuart Russell and Peter Norvig recount eight definitions of AI from the late 20th century and organize them into four categories along two dimensions. One dimension is about thought processes and reasoning, and the other is about behavior. I’ve recreated a table from their text below, with slightly different titles for the four categories that I think are a little clearer.

If people who dedicate their careers to working in AI can’t agree on its definition, you can bet that their work will be highly varied and often divergent! Is AI about cognition? Or is it really just about action? Is it about emulating human thinking or action, or is it about thinking or acting optimally? Answers to these questions will change the way you approach AI, and those different approaches will require different methods. Russell and Norvig note in their book that, historically, all four groups have both disparaged and helped each other.

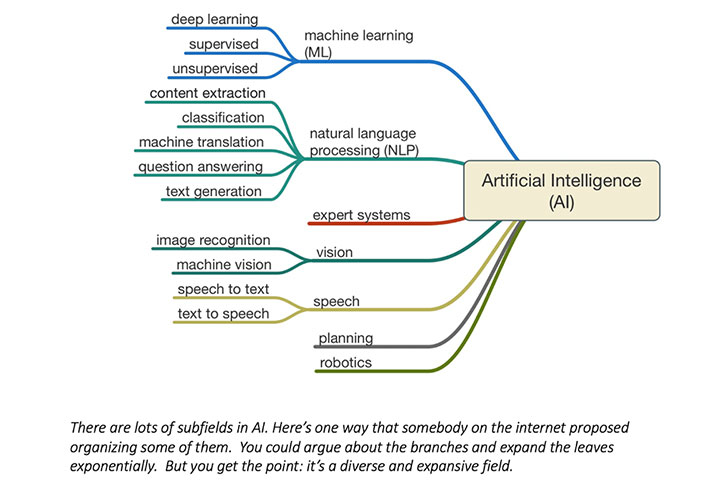

The Acting Like Humans quadrant is the “Turing Test Approach.” In 1950, Alan Turing proposed the “Turing Test” to provide an operational definition of “intelligence.” He said that a computer passed the test if it could take questions from a person and offer responses back that the questioner would believe had come from another person (and not a computer). In other words, if a person couldn’t tell that he or she was interacting with a machine, then it passed. Turing’s original test deliberately avoided physical interaction and called for the questions to be submitted in writing and responses returned in kind. However, the “Total Turing Test” (TTT), which was later proposed by cognitive scientist Stevan Harnad, includes video and audio too. (Watch Ex Machina on Netflix for an example in action!) Russell and Norvig note that to pass a TTT an AI would need:

- Natural Language Processing to communicate conversationally;

- Knowledge Representation to store what it knows or hears;

- Automated Reasoning to reason over its knowledge to answer questions and draw new conclusions;

- Machine Learning to adapt to new circumstances and to detect and extrapolate patterns;

- Computer Vision to perceive objects in its physical environment; and

- Robotics to manipulate objects and move about.

They go on to say that these disciplines compose most of modern AI, and that Turing deserves credit for designing a test that remains relevant even 60 years later.

It’s clear that Turing helped guide the exploration of the field. However, real researchers haven’t spent much time trying to build something that can pass a TTT, and, in my opinion, rightly so. Studying the underlying principles of intelligence is more likely to yield real understanding than trying to emulate a person. (In fact, attempts at human emulation in advance of deeper understanding might yield more techniques for trickery than any real AI.) Makes sense, right? After all, we made progress on our quest to enable “artificial flight” when we stopped trying to emulate birds and, inspired by fluid mechanics, started using wind tunnels to understand aerodynamics. In any case, do we really want to create artificial intelligence that acts like us? Of course there would be applications, but it seems that this approach is more limited than alternatives.

The Thinking Like Humans quadrant is sometimes referred to as “Knowledge Based Artificial Intelligence (KBAI)” or “Cognitive Systems.” I took a class focused just on this area of AI. I spent a lot of time building AI agents that could solve Raven's Progressive Matrix problems and reflecting on how people think. The back and forth between reflecting on human cognition to yield insights for AI and reflecting on AI to yield insights about human cognition was super interesting. It was a great class.

Cognitive science brings together computer models from AI and experimental techniques from psychology to build testable theories about the human mind. There is much to be learned by understanding human cognition. And you could argue that AI needs to understand how humans think so that it can best interact with us. But is the goal of AI to emulate human thinking? Aspects of it, certainly, but that seems unnecessarily limiting to me. As great as human cognition is, it has shortcomings that we should seek to avoid in AI, and therefore this model seems useful but incomplete.

The Thinking Optimally quadrant is the “laws of thought” approach. Aristotle’s attempts to codify “right thinking,” or irrefutable reasoning processes, were the genesis of “logic.” Logicians in the 19th century developed precise notation to express statements about all kinds of things and the relations among them. Russell and Norvig note that “by 1965, programs existed that could, in principle, solve any solvable problem described in logical notation... The so-called logicist tradition within artificial intelligence hopes to build on such programs to create intelligent systems.” However, they further note that there are two big problems with this approach. First, it’s hard to state informal knowledge formally, especially when it’s less than 100% certain. Second, solving even simple problems that involve a limited number of facts could exhaust computing resources with this approach.

Last is the Acting Optimally quadrant. Russell and Norvig call it “The rational agent approach.” They say that a rational agent is “one that acts so as to achieve the best outcome or, when there is uncertainty, the best expected outcome.” We know that all computer programs do something. But rational agents are expected to do more: operate autonomously, perceive their environment, persist over time, adapt to change, and create and pursue goals.
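To make “best expected outcome” a little more concrete, here is a minimal sketch of how an agent might choose between actions under uncertainty by weighing each possible outcome by its probability. This is only an illustration, not something taken from Russell and Norvig’s text: the function names, the two actions, and all of the probabilities and utility numbers are made up for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: each action maps to a list of
# (probability, utility) pairs describing its possible outcomes.
Outcomes = List[Tuple[float, float]]


def expected_utility(outcomes: Outcomes) -> float:
    """Probability-weighted average utility of one action's outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(options: Dict[str, Outcomes]) -> str:
    """Return the action whose expected utility is highest."""
    return max(options, key=lambda action: expected_utility(options[action]))


# Made-up numbers for illustration only.
options = {
    "brake": [(0.95, 10.0), (0.05, -20.0)],
    "swerve": [(0.60, 15.0), (0.40, -100.0)],
}

best = choose_action(options)
```

With these invented numbers, swerving has the single best possible outcome, but braking has the higher expected utility, so that is what the agent picks. A real rational agent would also have to estimate those probabilities and utilities from what it perceives, which is where most of the hard work lies.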

Russell and Norvig argue that the rational agent approach integrates the inference capability from the ‘laws of thought’ approach, since making correct inferences is often part of acting rationally. However, acting optimally does not always require inference. A reflex, like quickly letting go of a hot pan you grabbed accidentally, is more efficient than careful deliberation followed by slower action, so reflexes are an important part of acting optimally. Russell and Norvig also note that “all of the skills needed for the Turing Test also allow an agent to act rationally.”

I like the rational agent approach. It focuses on optimal behavior yet is informed by learnings from the other approaches. It’s a strong model for enabling “artificial intelligence” in the same way that we succeeded in enabling “artificial flight”: not by focusing on emulation, but by focusing on the goal of flying. In this case, the goal is producing optimal results. So the definition of artificial intelligence in this approach is intelligent behavior in artifacts. (Self-driving cars are a good example of the rational agent approach.) The other approaches to AI are interesting, useful, and important, but for now, I think that the most immediate and practical applications of AI are going to come from understanding the general principles of rational agents and the components for constructing them.

Business applications of AI (or any other technology) require that their value be clearly quantified. “New,” “Exciting,” and “Cool” don't pay the bills. Substantive investments must be about creating quantifiable value. At Allied Universal, we’ve used this view of AI to understand what's likely to happen at a client's site regarding safety and security incidents and then automatically suggest workflows to our security officers that drive quantifiably better outcomes including reducing accidents and crime (see https://www.aus.com/heliaus). Clients using our HELIAUS® platform have seen, on average, over a 20% reduction in safety and security incidents. This will likely improve as the data gets richer and the algorithms get more effective. HELIAUS® is reducing crimes and accidents—it’s important work!

While I like this for a working model and approach to AI, there is still the question: What is intelligence? I’ve thought about that a lot during my graduate work at Georgia Tech over the last few years. My thinking was a bit blocked until I separated “consciousness” from “intelligence.” Once I settled on those being two different things, my thinking about AI developed faster. At some point, as our understanding of intelligence grows, I’m sure we'll make meaningful progress on understanding the essence of “consciousness.” However, I think we should get self-driving cars working first, and leave that to philosophers, theologians, psychologists, and after-dinner drinks for now.

Large parts of this article were adapted from Artificial Intelligence: A Modern Approach, Third Edition (2009), which is a keystone of The Georgia Institute of Technology's Intelligent Systems PhD qualifier exam.

About the Author:

Mark Mullison, Chief Information Officer (CIO), Allied Universal

As Chief Information Officer (CIO) for Allied Universal®, Mark is responsible for both internal and client facing technology innovation. With more than 20 years of extensive experience in product development, information technology and global operations, he has a proven track record of integrating people, processes and technology into world-class products and operating environments that deliver measurable ROI. Mark received a bachelor’s degree in Computer Science from the University of Michigan and has completed the Essentials of Management Program at the Wharton School of Business. He received a master's degree in Computer Science from the Georgia Institute of Technology with a specialization in Interactive Intelligence.